In part 1 of this series, we took a look inside the ODA to see what the OS was doing. Here, we'll dig a little further into the disk and storage architecture, covering both the hardware and ASM.

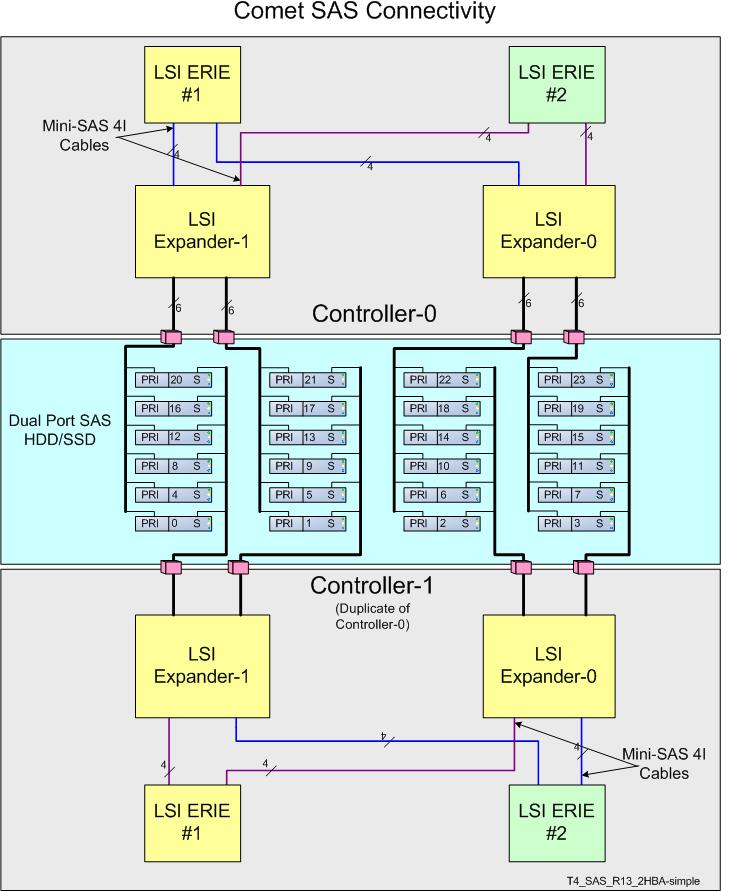

There have been a lot of questions about the storage layout of the shared disks. We'll start at the SAS controllers and work our way down to the disks. First, there are 2 dual-ported LSI SAS controllers in each of the system controllers (SCs). They are each connected to a SAS expander that is located on the system board. Each of these SAS expanders connects to 12 of the disks on the front of the ODA. The disks are dual-ported SAS, so each disk is connected to an expander on each of the SCs. Below is a diagram of the SAS connectivity on the ODA (Note: all diagrams are collected from public ODA documentation, as well as various ODA-related support notes available on My Oracle Support).

From this, you can see the relationship between the SAS controllers, SAS expanders, and SAS drives on the front end. If you look at the columns of disks, the first 2 columns are serviced by one expander, while the third and fourth columns are serviced by the other expander. What the diagram refers to as "Controller-0" and "Controller-1" are actually the independent SCs in the X4370M2. What this shows is that you can lose any of the following components in the diagram and your database will continue to run (assuming RAC is in use):

- Hard Disk

- SSD

- SAS Expander

- SAS Controller (RAID adapter)

Only the loss of an expander would cause the entire SC to come down. In the event of an SSD or hard disk failure (even 2 hard disks), ASM redundancy would keep both database instances online. All hard disks and solid state disks are hot-swappable, so there is no downtime to replace a disk. You would have to power off the SC to replace a SAS controller, but the SC would still have a path to all disks until that maintenance window, leaving you the ability to take it down at your convenience. The unaffected SC would continue to run without issue. This also applies to other hardware failures local to a single SC. The only components shared between the SCs are the SSD, hard disks, and power supplies (all of which are redundant).
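The failure behaviour described above can be summarized in a short Python sketch. This is only a simplified model built from the description in this post, not anything that ships with the ODA: the component labels (`SC0/exp0`, `SC1/ctrl1`, and so on) and both function names are hypothetical, and the rule that an SC goes down once both of its SAS controllers are lost is my own assumption rather than something stated in the text.

```python
# Simplified model of the failure scenarios described in the post.
# Component labels such as "SC0/exp1" are illustrative, not ODA identifiers.

SCS = ("SC0", "SC1")


def surviving_scs(failed):
    """Return the SCs that stay up after the given components fail.

    `failed` is a set of labels such as "SC0/exp1" or "SC1/ctrl0".
    From the text: losing an expander takes its whole SC down, while
    losing one SAS controller leaves the SC with a path to all disks.
    Assumption (not from the text): losing both controllers in one SC
    leaves that SC without any path to the shared disks.
    """
    up = []
    for sc in SCS:
        expander_lost = any(f.startswith(f"{sc}/exp") for f in failed)
        controllers_lost = {f for f in failed if f.startswith(f"{sc}/ctrl")}
        if expander_lost or len(controllers_lost) >= 2:
            continue
        up.append(sc)
    return up


def database_available(failed):
    """With RAC in use, the database stays up while at least one SC survives."""
    return len(surviving_scs(failed)) > 0


if __name__ == "__main__":
    print(surviving_scs({"SC0/exp0"}))        # only the other SC remains
    print(surviving_scs({"SC0/ctrl1"}))       # both SCs keep running
    print(database_available({"SC0/exp0"}))   # RAC keeps the database online
```

Disk and SSD failures are left out of the model on purpose, because those are absorbed by ASM redundancy rather than by the SAS topology.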

How are the disks actually configured at the OS level? Because all of the connections inside the SCs are redundant, the disk devices (and SSDs) are configured to use device-mapper for multipathing. The oak configurator automatically configures the /etc/multipath.conf file on each SC after the disks have been partitioned. The multipath devices are named based on the type of disk (HDD or SSD), expander, slot, and a hash value. Below is a sample of the /etc/multipath.conf file:

    defaults {
        user_friendly_names yes
        path_grouping_policy failover
        failback manual
        no_path_retry fail
        flush_on_last_del yes
        queue_without_daemon no
    }

    multipaths {
        multipath {
            wwid 35000c5003a446893
            alias HDD_E0_S01_977561747
            mode 660
            uid 1000
            gid 1006
        }
        multipath {
            wwid 35000c5003a42ad5b
            alias HDD_E0_S00_977448283
            mode 660
            uid 1000
            gid 1006
        }

This results in the following disks:

[root@patty ~]# ls -al /dev/mapper/*

crw------- 1 root root 10, 63 Dec 5 17:38 /dev/mapper/control

brw-rw---- 1 grid asmadmin 253, 16 Dec 6 09:47 /dev/mapper/HDD_E0_S00_977448283

brw-rw---- 1 grid asmadmin 253, 61 Dec 12 08:59 /dev/mapper/HDD_E0_S00_977448283p1

brw-rw---- 1 grid asmadmin 253, 62 Dec 12 08:59 /dev/mapper/HDD_E0_S00_977448283p2

brw-rw---- 1 grid asmadmin 253, 26 Dec 6 09:47 /dev/mapper/HDD_E0_S01_977561747

brw-rw---- 1 grid asmadmin 253, 29 Dec 12 08:59 /dev/mapper/HDD_E0_S01_977561747p1

brw-rw---- 1 grid asmadmin 253, 30 Dec 12 08:59 /dev/mapper/HDD_E0_S01_977561747p2

brw-rw---- 1 grid asmadmin 253, 17 Dec 6 09:48 /dev/mapper/HDD_E0_S04_975085971

brw-rw---- 1 grid asmadmin 253, 66 Dec 12 08:59 /dev/mapper/HDD_E0_S04_975085971p1

brw-rw---- 1 grid asmadmin 253, 67 Dec 12 08:59 /dev/mapper/HDD_E0_S04_975085971p2

brw-rw---- 1 grid asmadmin 253, 27 Dec 6 09:48 /dev/mapper/HDD_E0_S05_977762435

brw-rw---- 1 grid asmadmin 253, 37 Dec 12 08:59 /dev/mapper/HDD_E0_S05_977762435p1

brw-rw---- 1 grid asmadmin 253, 38 Dec 12 08:59 /dev/mapper/HDD_E0_S05_977762435p2

brw-rw---- 1 grid asmadmin 253, 18 Dec 6 09:48 /dev/mapper/HDD_E0_S08_975084479

brw-rw---- 1 grid asmadmin 253, 68 Dec 12 08:59 /dev/mapper/HDD_E0_S08_975084479p1

brw-rw---- 1 grid asmadmin 253, 69 Dec 12 08:48 /dev/mapper/HDD_E0_S08_975084479p2

brw-rw---- 1 grid asmadmin 253, 24 Dec 6 09:49 /dev/mapper/HDD_E0_S09_977562223

brw-rw---- 1 grid asmadmin 253, 33 Dec 12 08:59 /dev/mapper/HDD_E0_S09_977562223p1

brw-rw---- 1 grid asmadmin 253, 34 Dec 12 08:59 /dev/mapper/HDD_E0_S09_977562223p2

brw-rw---- 1 grid asmadmin 253, 19 Dec 6 09:49 /dev/mapper/HDD_E0_S12_975086927

brw-rw---- 1 grid asmadmin 253, 64 Dec 12 08:59 /dev/mapper/HDD_E0_S12_975086927p1

brw-rw---- 1 grid asmadmin 253, 65 Dec 12 08:34 /dev/mapper/HDD_E0_S12_975086927p2

brw-rw---- 1 grid asmadmin 253, 25 Dec 6 09:50 /dev/mapper/HDD_E0_S13_975069383

brw-rw---- 1 grid asmadmin 253, 35 Dec 12 08:59 /dev/mapper/HDD_E0_S13_975069383p1

brw-rw---- 1 grid asmadmin 253, 36 Dec 12 06:47 /dev/mapper/HDD_E0_S13_975069383p2

brw-rw---- 1 grid asmadmin 253, 20 Dec 6 09:49 /dev/mapper/HDD_E0_S16_977454443

brw-rw---- 1 grid asmadmin 253, 70 Dec 12 08:59 /dev/mapper/HDD_E0_S16_977454443p1

brw-rw---- 1 grid asmadmin 253, 71 Dec 12 08:48 /dev/mapper/HDD_E0_S16_977454443p2

brw-rw---- 1 grid asmadmin 253, 22 Dec 6 09:50 /dev/mapper/HDD_E0_S17_975084035

brw-rw---- 1 grid asmadmin 253, 31 Dec 12 08:59 /dev/mapper/HDD_E0_S17_975084035p1

brw-rw---- 1 grid asmadmin 253, 32 Dec 12 08:20 /dev/mapper/HDD_E0_S17_975084035p2

brw-rw---- 1 grid asmadmin 253, 4 Dec 6 09:50 /dev/mapper/HDD_E1_S02_979821467

brw-rw---- 1 grid asmadmin 253, 41 Dec 12 08:59 /dev/mapper/HDD_E1_S02_979821467p1

brw-rw---- 1 grid asmadmin 253, 42 Dec 12 08:59 /dev/mapper/HDD_E1_S02_979821467p2

brw-rw---- 1 grid asmadmin 253, 14 Dec 6 09:47 /dev/mapper/HDD_E1_S03_977580879

brw-rw---- 1 grid asmadmin 253, 39 Dec 12 08:59 /dev/mapper/HDD_E1_S03_977580879p1

brw-rw---- 1 grid asmadmin 253, 40 Dec 12 08:59 /dev/mapper/HDD_E1_S03_977580879p2

brw-rw---- 1 grid asmadmin 253, 5 Dec 6 09:48 /dev/mapper/HDD_E1_S06_977572915

brw-rw---- 1 grid asmadmin 253, 48 Dec 12 08:59 /dev/mapper/HDD_E1_S06_977572915p1

brw-rw---- 1 grid asmadmin 253, 49 Dec 12 08:00 /dev/mapper/HDD_E1_S06_977572915p2

brw-rw---- 1 grid asmadmin 253, 15 Dec 6 09:48 /dev/mapper/HDD_E1_S07_975059707

brw-rw---- 1 grid asmadmin 253, 59 Dec 12 08:59 /dev/mapper/HDD_E1_S07_975059707p1

brw-rw---- 1 grid asmadmin 253, 60 Dec 12 08:20 /dev/mapper/HDD_E1_S07_975059707p2

brw-rw---- 1 grid asmadmin 253, 6 Dec 6 09:48 /dev/mapper/HDD_E1_S10_977415523

brw-rw---- 1 grid asmadmin 253, 46 Dec 12 08:59 /dev/mapper/HDD_E1_S10_977415523p1

brw-rw---- 1 grid asmadmin 253, 47 Dec 12 08:15 /dev/mapper/HDD_E1_S10_977415523p2

brw-rw---- 1 grid asmadmin 253, 12 Dec 6 09:48 /dev/mapper/HDD_E1_S11_975067919

brw-rw---- 1 grid asmadmin 253, 55 Dec 12 08:59 /dev/mapper/HDD_E1_S11_975067919p1

brw-rw---- 1 grid asmadmin 253, 56 Dec 12 08:15 /dev/mapper/HDD_E1_S11_975067919p2

brw-rw---- 1 grid asmadmin 253, 7 Dec 6 09:49 /dev/mapper/HDD_E1_S14_979772987

brw-rw---- 1 grid asmadmin 253, 44 Dec 12 08:59 /dev/mapper/HDD_E1_S14_979772987p1

brw-rw---- 1 grid asmadmin 253, 45 Dec 12 07:17 /dev/mapper/HDD_E1_S14_979772987p2

brw-rw---- 1 grid asmadmin 253, 13 Dec 6 09:49 /dev/mapper/HDD_E1_S15_975079031

brw-rw---- 1 grid asmadmin 253, 57 Dec 12 08:59 /dev/mapper/HDD_E1_S15_975079031p1

brw-rw---- 1 grid asmadmin 253, 58 Dec 12 08:15 /dev/mapper/HDD_E1_S15_975079031p2

brw-rw---- 1 grid asmadmin 253, 8 Dec 6 09:50 /dev/mapper/HDD_E1_S18_975100927

brw-rw---- 1 grid asmadmin 253, 51 Dec 12 08:59 /dev/mapper/HDD_E1_S18_975100927p1

brw-rw---- 1 grid asmadmin 253, 52 Dec 12 07:04 /dev/mapper/HDD_E1_S18_975100927p2

brw-rw---- 1 grid asmadmin 253, 10 Dec 6 09:50 /dev/mapper/HDD_E1_S19_977425955

brw-rw---- 1 grid asmadmin 253, 53 Dec 12 08:59 /dev/mapper/HDD_E1_S19_977425955p1

brw-rw---- 1 grid asmadmin 253, 54 Dec 12 08:59 /dev/mapper/HDD_E1_S19_977425955p2

brw-rw---- 1 grid asmadmin 253, 21 Dec 6 09:49 /dev/mapper/SSD_E0_S20_805650385

brw-rw---- 1 grid asmadmin 253, 63 Dec 12 08:59 /dev/mapper/SSD_E0_S20_805650385p1

brw-rw---- 1 grid asmadmin 253, 23 Dec 6 09:49 /dev/mapper/SSD_E0_S21_805650582

brw-rw---- 1 grid asmadmin 253, 28 Dec 12 08:59 /dev/mapper/SSD_E0_S21_805650582p1

brw-rw---- 1 grid asmadmin 253, 9 Dec 6 09:50 /dev/mapper/SSD_E1_S22_805650581

brw-rw---- 1 grid asmadmin 253, 43 Dec 12 08:59 /dev/mapper/SSD_E1_S22_805650581p1

brw-rw---- 1 grid asmadmin 253, 11 Dec 6 09:50 /dev/mapper/SSD_E1_S23_805650633

brw-rw---- 1 grid asmadmin 253, 50 Dec 12 08:59 /dev/mapper/SSD_E1_S23_805650633p1

brw-rw---- 1 root disk 253, 2 Dec 5 17:38 /dev/mapper/VolGroupSys-LogVolOpt

brw-rw---- 1 root disk 253, 0 Dec 5 17:38 /dev/mapper/VolGroupSys-LogVolRoot

brw-rw---- 1 root disk 253, 3 Dec 5 17:38 /dev/mapper/VolGroupSys-LogVolSwap

brw-rw---- 1 root disk 253, 1 Dec 5 17:38 /dev/mapper/VolGroupSys-LogVolU01

As you can see, /dev/mapper/HDD_E1_S15_975079031 resides in expander 1, slot 15, and has 2 partitions (p1 and p2). These device-mapper partitions are what get added to ASM. One thing we can glean from this is that the disks are actually partitioned, which is the opposite of how Oracle's other engineered database system (Exadata) handles disks. While Exadata storage servers use the concept of physicaldisks, celldisks, and griddisks, the ODA goes back to the tried and true method of standard disk partitioning. When configuring the ODA, you are given the choice of whether you would prefer backups to be internal or external to the ODA. Naturally, choosing "internal" makes the RECO diskgroup larger, while choosing "external" gives about an 85/15 split in favor of the DATA diskgroup.
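The naming convention (disk type, expander, slot, hash value, and an optional partition suffix) is regular enough to decode programmatically. Here is a minimal Python sketch of that idea. The regular expression is inferred from the device names in the listing above rather than from any Oracle documentation, and `MapperDevice` and `parse_alias` are names I made up for illustration.

```python
import re
from typing import NamedTuple, Optional


class MapperDevice(NamedTuple):
    disk_type: str             # "HDD" or "SSD"
    expander: int              # the number after "E"
    slot: int                  # the number after "S"
    hash_value: str            # trailing numeric identifier
    partition: Optional[int]   # None for the whole-disk device


# Pattern inferred from names such as HDD_E1_S15_975079031p2 (an assumption).
ALIAS_RE = re.compile(r"^(HDD|SSD)_E(\d+)_S(\d+)_(\d+)(?:p(\d+))?$")


def parse_alias(name):
    """Split a device-mapper alias (with or without its /dev/mapper/ prefix)."""
    base = name.rsplit("/", 1)[-1]
    match = ALIAS_RE.match(base)
    if match is None:
        raise ValueError(f"not an ODA-style disk alias: {name!r}")
    disk_type, expander, slot, hash_value, part = match.groups()
    return MapperDevice(
        disk_type,
        int(expander),
        int(slot),
        hash_value,
        int(part) if part is not None else None,
    )


if __name__ == "__main__":
    print(parse_alias("/dev/mapper/HDD_E1_S15_975079031p2"))
    print(parse_alias("SSD_E0_S20_805650385"))
```

Entries that do not follow the convention, such as `control` or the `VolGroupSys-*` logical volumes, raise a `ValueError`, which makes it easy to filter the shared disks out of a full `/dev/mapper` listing.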

We chose to install our ODA using the diskgroup sizing for external backups. Here's what the installer created for us:

    SQL> @asm_diskgroups

    Diskgroup  Sector Size AU Size (MB) State       Redundancy    Size (MB)    Free (MB)  Usable (MB)
    ---------- ----------- ------------ ----------- ---------- ------------ ------------ ------------
    DATA               512            4 MOUNTED     HIGH          9,830,400    9,630,088    3,210,029
    RECO               512            4 MOUNTED     HIGH          1,616,000    1,246,720      415,573
    REDO               512            4 MOUNTED     HIGH            280,016      254,844       84,948
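A quick bit of arithmetic on these figures confirms the DATA/RECO split and shows where the usable numbers come from. The Python sketch below uses only the values from the output above; the function names are mine. Note that ASM's own USABLE_FILE_MB also subtracts REQUIRED_MIRROR_FREE_MB before dividing by the redundancy factor, and since the script behind `@asm_diskgroups` is not shown here, treating usable space as simply free space divided by three is an assumption that happens to match the numbers.

```python
# Figures copied from the asm_diskgroups output (all values in MB).
diskgroups = {
    "DATA": {"size": 9_830_400, "free": 9_630_088, "usable": 3_210_029},
    "RECO": {"size": 1_616_000, "free": 1_246_720, "usable": 415_573},
    "REDO": {"size": 280_016, "free": 254_844, "usable": 84_948},
}

# High redundancy keeps three copies of every extent.
MIRROR_COPIES = 3


def data_reco_split(groups):
    """Return the (DATA %, RECO %) share of the hard disk capacity."""
    hdd_total = groups["DATA"]["size"] + groups["RECO"]["size"]
    data_pct = 100 * groups["DATA"]["size"] / hdd_total
    return round(data_pct, 1), round(100 - data_pct, 1)


def usable_estimate(free_mb):
    """Estimate usable space as free space divided by the mirror count."""
    return free_mb // MIRROR_COPIES


if __name__ == "__main__":
    print(data_reco_split(diskgroups))
    for name, dg in diskgroups.items():
        print(name, usable_estimate(dg["free"]), dg["usable"])
```

The split works out to roughly 86/14, in line with the "about 85/15" figure, and the estimate reproduces the Usable (MB) column for all three diskgroups.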

There was no choice in the redundancy: the ODA uses high redundancy all the way. While it is possible to manually reconfigure the diskgroups to use normal redundancy, remember that a normal redundancy diskgroup can only tolerate the loss of one failure group; losing a second one while the first is still unavailable will take the diskgroup offline. Because Oracle does not include spare disks with the ODA, I recommend keeping high redundancy in the configuration. When we dig deeper into the ASM disk configuration, we see that each disk is its own failgroup:

SQL> @asm_disks

Diskgroup Disk Fail Group Size (MB)

---------- ---------------------------------------- ------------------------------ ---------

DATA /dev/mapper/HDD_E0_S00_977448283p1 HDD_E0_S00_977448283P1 491,520

DATA /dev/mapper/HDD_E0_S01_977561747p1 HDD_E0_S01_977561747P1 491,520

DATA /dev/mapper/HDD_E0_S04_975085971p1 HDD_E0_S04_975085971P1 491,520

DATA /dev/mapper/HDD_E0_S05_977762435p1 HDD_E0_S05_977762435P1 491,520

DATA /dev/mapper/HDD_E0_S08_975084479p1 HDD_E0_S08_975084479P1 491,520

DATA /dev/mapper/HDD_E0_S09_977562223p1 HDD_E0_S09_977562223P1 491,520

DATA /dev/mapper/HDD_E0_S12_975086927p1 HDD_E0_S12_975086927P1 491,520

DATA /dev/mapper/HDD_E0_S13_975069383p1 HDD_E0_S13_975069383P1 491,520

DATA /dev/mapper/HDD_E0_S16_977454443p1 HDD_E0_S16_977454443P1 491,520

DATA /dev/mapper/HDD_E0_S17_975084035p1 HDD_E0_S17_975084035P1 491,520

DATA /dev/mapper/HDD_E1_S02_979821467p1 HDD_E1_S02_979821467P1 491,520

DATA /dev/mapper/HDD_E1_S03_977580879p1 HDD_E1_S03_977580879P1 491,520

DATA /dev/mapper/HDD_E1_S06_977572915p1 HDD_E1_S06_977572915P1 491,520

DATA /dev/mapper/HDD_E1_S07_975059707p1 HDD_E1_S07_975059707P1 491,520

DATA /dev/mapper/HDD_E1_S10_977415523p1 HDD_E1_S10_977415523P1 491,520

DATA /dev/mapper/HDD_E1_S11_975067919p1 HDD_E1_S11_975067919P1 491,520

DATA /dev/mapper/HDD_E1_S14_979772987p1 HDD_E1_S14_979772987P1 491,520

DATA /dev/mapper/HDD_E1_S15_975079031p1 HDD_E1_S15_975079031P1 491,520

DATA /dev/mapper/HDD_E1_S18_975100927p1 HDD_E1_S18_975100927P1 491,520

DATA /dev/mapper/HDD_E1_S19_977425955p1 HDD_E1_S19_977425955P1 491,520

RECO /dev/mapper/HDD_E0_S00_977448283p2 HDD_E0_S00_977448283P2 80,800

RECO /dev/mapper/HDD_E0_S01_977561747p2 HDD_E0_S01_977561747P2 80,800

RECO /dev/mapper/HDD_E0_S04_975085971p2 HDD_E0_S04_975085971P2 80,800

RECO /dev/mapper/HDD_E0_S05_977762435p2 HDD_E0_S05_977762435P2 80,800

RECO /dev/mapper/HDD_E0_S08_975084479p2 HDD_E0_S08_975084479P2 80,800

RECO /dev/mapper/HDD_E0_S09_977562223p2 HDD_E0_S09_977562223P2 80,800

RECO /dev/mapper/HDD_E0_S12_975086927p2 HDD_E0_S12_975086927P2 80,800

RECO /dev/mapper/HDD_E0_S13_975069383p2 HDD_E0_S13_975069383P2 80,800

RECO /dev/mapper/HDD_E0_S16_977454443p2 HDD_E0_S16_977454443P2 80,800

RECO /dev/mapper/HDD_E0_S17_975084035p2 HDD_E0_S17_975084035P2 80,800

RECO /dev/mapper/HDD_E1_S02_979821467p2 HDD_E1_S02_979821467P2 80,800

RECO /dev/mapper/HDD_E1_S03_977580879p2 HDD_E1_S03_977580879P2 80,800

RECO /dev/mapper/HDD_E1_S06_977572915p2 HDD_E1_S06_977572915P2 80,800

RECO /dev/mapper/HDD_E1_S07_975059707p2 HDD_E1_S07_975059707P2 80,800

RECO /dev/mapper/HDD_E1_S10_977415523p2 HDD_E1_S10_977415523P2 80,800

RECO /dev/mapper/HDD_E1_S11_975067919p2 HDD_E1_S11_975067919P2 80,800

RECO /dev/mapper/HDD_E1_S14_979772987p2 HDD_E1_S14_979772987P2 80,800

RECO /dev/mapper/HDD_E1_S15_975079031p2 HDD_E1_S15_975079031P2 80,800

RECO /dev/mapper/HDD_E1_S18_975100927p2 HDD_E1_S18_975100927P2 80,800

RECO /dev/mapper/HDD_E1_S19_977425955p2 HDD_E1_S19_977425955P2 80,800

REDO /dev/mapper/SSD_E0_S20_805650385p1 SSD_E0_S20_805650385P1 70,004

REDO /dev/mapper/SSD_E0_S21_805650582p1 SSD_E0_S21_805650582P1 70,004

REDO /dev/mapper/SSD_E1_S22_805650581p1 SSD_E1_S22_805650581P1 70,004

REDO /dev/mapper/SSD_E1_S23_805650633p1 SSD_E1_S23_805650633P1 70,004

44 rows selected.
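As a sanity check, the per-disk sizes in this listing add up exactly to the diskgroup totals reported earlier. The short Python sketch below does the multiplication; the disk counts and sizes are taken from the listing, and the variable and function names are only illustrative.

```python
# (diskgroup, number of ASM disks, size of each disk in MB), from the listing.
disk_layout = [
    ("DATA", 20, 491_520),
    ("RECO", 20, 80_800),
    ("REDO", 4, 70_004),
]


def diskgroup_totals(layout):
    """Multiply disk count by per-disk size for each diskgroup."""
    return {name: count * size_mb for name, count, size_mb in layout}


def total_asm_disks(layout):
    """Count the ASM disks (partitions) across all diskgroups."""
    return sum(count for _, count, _ in layout)


if __name__ == "__main__":
    print(diskgroup_totals(disk_layout))
    print(total_asm_disks(disk_layout))
```

The totals match the Size (MB) column of the diskgroup listing, and the disk count matches the 44 rows returned: two partitions on each of the 20 hard disks plus one partition on each of the 4 SSDs.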

One other thing that I found surprising was that the sector size listed for the SSD-backed REDO diskgroup was 512, not the 4096 that I expected. Flash media typically uses a 4k block size. Looking at the attributes for the ASM diskgroups, there isn't anything special on the ODA:

SQL> @asm_attributes

Diskgroup Attribute Value

---------- ------------------------------ --------------------

DATA access_control.enabled FALSE

DATA access_control.umask 066

DATA au_size 4194304

DATA cell.smart_scan_capable FALSE

DATA compatible.asm 11.2.0.2.0

DATA compatible.rdbms 11.2.0.2.0

DATA disk_repair_time 3.6h

DATA idp.boundary auto

DATA idp.type dynamic

DATA sector_size 512

RECO access_control.enabled FALSE

RECO access_control.umask 066

RECO au_size 4194304

RECO cell.smart_scan_capable FALSE

RECO compatible.advm 11.2.0.0.0

RECO compatible.asm 11.2.0.2.0

RECO compatible.rdbms 11.2.0.2.0

RECO disk_repair_time 3.6h

RECO idp.boundary auto

RECO idp.type dynamic

RECO sector_size 512

REDO access_control.enabled FALSE

REDO access_control.umask 066

REDO au_size 4194304

REDO cell.smart_scan_capable FALSE

REDO compatible.asm 11.2.0.2.0

REDO compatible.rdbms 11.2.0.2.0

REDO disk_repair_time 3.6h

REDO idp.boundary auto

REDO idp.type dynamic

REDO sector_size 512

31 rows selected.

The compatible parameters are set to 11.2.0.2, and cell.smart_scan_capable is set to false. From this point, the ODA is just a standard RAC system that has direct-attached shared storage.
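To double-check that nothing unusual is hiding in that attribute listing, here is a small Python sketch that compares the attribute names across the three diskgroups and converts two of the raw values into friendlier units. The attribute names and values are copied from the output above; the helper names are hypothetical, and `repair_time_minutes` only handles the hours form (`3.6h`) that appears in this listing.

```python
# Attribute names shared by all three diskgroups in the listing.
COMMON = {
    "access_control.enabled", "access_control.umask", "au_size",
    "cell.smart_scan_capable", "compatible.asm", "compatible.rdbms",
    "disk_repair_time", "idp.boundary", "idp.type", "sector_size",
}

attributes = {
    "DATA": set(COMMON),
    "RECO": COMMON | {"compatible.advm"},
    "REDO": set(COMMON),
}


def extra_attributes(attrs):
    """Return attributes that a diskgroup has beyond the shared set."""
    shared = set.intersection(*attrs.values())
    return {
        name: sorted(names - shared)
        for name, names in attrs.items()
        if names - shared
    }


def au_size_mb(au_size_bytes):
    """Convert the au_size attribute (bytes) to megabytes."""
    return au_size_bytes // (1024 * 1024)


def repair_time_minutes(value):
    """Convert a disk_repair_time such as '3.6h' to minutes (hours form only)."""
    return round(float(value.rstrip("h")) * 60)


if __name__ == "__main__":
    print(extra_attributes(attributes))
    print(au_size_mb(4194304))
    print(repair_time_minutes("3.6h"))
```

The only difference between the diskgroups is that RECO also carries a `compatible.advm` attribute, which accounts for the listing returning 31 rows rather than 30.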

That's about it for now. I'll be back soon with another post surrounding the usage of the new Oracle Appliance Kit (oakcli) that is used to manage storage and other components of the ODA.

Hello, in connection with your comment about the block size for the redo group created from SSD drives, can you list the init.ora parameters of the database? Redo log can be forced to use 4k block with a hidden parameter I think.

Stanislav,

Are you asking about the _disk_sector_size_override parameter in ASM? If so, that is currently set to true, but the sector size for each of the diskgroups is still 512.

Any chance that you will be doing a blog post on the Oracle Database Appliance X3-2?