For some reason, I've been working on lots of Exadata V2 systems in the past few months. One issue I keep coming across with these clients is failure of the battery used by the RAID controller. These batteries were originally expected to last 2 years. Unfortunately, a defect causes them to reach end of life after approximately 18 months. The local Sun reps should have access to a schedule that says when the "regular maintenance" should occur. For one client, the problem wasn't caught until the batteries had run down completely and the disks were in WriteThrough mode. This can be seen by running MegaCli64. Here is the output showing the WriteBack/WriteThrough status for 2 different compute nodes (V2 is first, X2-2 is second):

[enkdb01:root] /root

> dmidecode -s system-product-name

SUN FIRE X4170 SERVER

[enkdb01:root] /root

> /opt/MegaRAID/MegaCli/MegaCli64 -LDInfo -LALL -aALL | grep "Cache Policy"

Default Cache Policy: WriteBack, ReadAheadNone, Direct, No Write Cache if Bad BBU

Current Cache Policy: WriteThrough, ReadAheadNone, Direct, No Write Cache if Bad BBU

Disk Cache Policy : Disabled

[root@enkdb03 ~]# dmidecode -s system-product-name

SUN FIRE X4170 M2 SERVER

[root@enkdb03 ~]# /opt/MegaRAID/MegaCli/MegaCli64 -LDInfo -LALL -aALL | grep "Cache Policy"

Default Cache Policy: WriteBack, ReadAheadNone, Direct, No Write Cache if Bad BBU

Current Cache Policy: WriteBack, ReadAheadNone, Direct, No Write Cache if Bad BBU

Disk Cache Policy : Disabled

If you have a V2 and you haven't replaced the batteries yet, it's worth running these commands to see what state your RAID controllers are in. To find out what this means for you, read on.
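If you'd rather script this check than eyeball the output, something along these lines could work. It is only a sketch: the function name `cache_policy_state` is made up for illustration, and it assumes the `Current Cache Policy:` lines look exactly like the output shown above.

```shell
#!/bin/bash
# Hypothetical helper (not part of MegaCli): reads "-LDInfo -LALL -aALL"
# output on stdin and summarizes the *current* cache policy.
# Prints WriteBack if every logical drive reports WriteBack, WriteThrough
# if any drive reports WriteThrough, and Unknown if no policy line is found.
cache_policy_state() {
    awk -F': *' '
        /^Current Cache Policy/ {
            seen = 1
            if ($2 ~ /^WriteThrough/) wt = 1
        }
        END {
            if (!seen)   print "Unknown"
            else if (wt) print "WriteThrough"
            else         print "WriteBack"
        }'
}

# Usage on a node (same MegaCli64 path as above):
#   /opt/MegaRAID/MegaCli/MegaCli64 -LDInfo -LALL -aALL | cache_policy_state
```

Reporting WriteThrough as soon as any one logical drive shows it is a deliberate choice here, since a single degraded drive is already worth a look.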

As you can see, on the V2 (X4170), the current cache policy is set to WriteThrough mode, while the X2 (X4170 M2) is still running in WriteBack mode. What's the difference? According to the interwebs (http://goo.gl/6LyVK):

RAID Features

Write Through Cache

With Write Through Cache the data is written to both the cache and drive once the data is retrieved. As the data is written to both places, should the information be required it can be retrieved from the cache for faster access. The downside of this method is that the time to carry out a Write operation is greater the time to do a Write to a non cache device. The total Write time is the time to write to the cache plus the time to Write the disk.

Write Back Cache

With Write Back Cache the write operation does not suffer from the Write time delay. The block of data is initially written to the cache, only when the cache is full or required is the data written to the disk.

The limitation of this method is that the storage device for a period of time does not contain the new or updated block of data. If the data in the cache is lost due to power failure the data cannot be recovered. When using Write Back Cache a battery backup module would prevent data loss in a RAID power failure.

We obviously have a problem on the V2 system, since it's running in WriteThrough mode. Why is this? The first thing to check is the status of the battery. From the Exadata setup/configuration best practices note (#1274318.1), we see the command that can be run to check the battery condition. From the note:

Proactive battery replacement should be performed within 60 days for any batteries that do not meet the following criteria:

1) "Full Charge Capacity" less than or equal to 800 mAh and "Max Error" less than 10%.

Immediately replace any batteries that do not meet the following criteria:

1) "Max Error" is 10% or greater (battery deemed unreliable regardless of "Full Charge Capacity" reading)

2) "Full Charge Capacity" less than 674 mAh regardless of "Max Error" reading
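To make those thresholds concrete, here is a rough sketch that turns the two readings into a verdict. The function names `bbu_field` and `classify_bbu` and the verdict strings are invented for illustration. The sketch reads the criteria above as the conditions that trigger a replacement, and it assumes the BBU output contains lines of the form `Full Charge Capacity: N mAh` and `Max Error: N %`.

```shell
#!/bin/bash
# Hypothetical helpers, not part of MegaCli.

# Print the numeric value of a field such as "Full Charge Capacity" or
# "Max Error" from "-AdpBbuCmd" output read on stdin.
bbu_field() {
    awk -F':' -v name="$1" '
        { key = $1; gsub(/^[ \t]+|[ \t]+$/, "", key) }
        key == name { print $2 + 0; exit }'
}

# Classify a battery from its capacity (mAh) and max error (%),
# using the thresholds quoted from the best practices note.
classify_bbu() {
    local capacity=$1 max_error=$2
    if [ -z "$capacity" ] || [ -z "$max_error" ]; then
        echo "unknown"                  # no reading available
    elif [ "$max_error" -ge 10 ] || [ "$capacity" -lt 674 ]; then
        echo "replace-immediately"
    elif [ "$capacity" -le 800 ]; then
        echo "replace-within-60-days"
    else
        echo "ok"
    fi
}

# Usage on a node:
#   out=$(/opt/MegaRAID/MegaCli/MegaCli64 -AdpBbuCmd -a0)
#   classify_bbu "$(echo "$out" | bbu_field "Full Charge Capacity")" \
#                "$(echo "$out" | bbu_field "Max Error")"
```

The "unknown" branch is there so that a node returning no BBU data at all is reported rather than silently treated as healthy.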

Let's run the command on our X2 and see how it looks:

[root@enkdb03 ~]# /opt/MegaRAID/MegaCli/MegaCli64 -AdpBbuCmd -a0 | grep "Full Charge" -A5 | sort | grep Full -A1

Full Charge Capacity: 1358 mAh

Max Error: 0 %

That looks great... just what we should expect. The "Full Charge Capacity" is well over the 800 mAh threshold. Now, let's look at the system that's in WriteThrough mode:

[enkdb01:root] /root

> /opt/MegaRAID/MegaCli/MegaCli64 -AdpBbuCmd -a0 | grep "Full Charge" -A5 | sort | grep Full -A1

Full Charge Capacity: 562 mAh

Max Error: 2 %

That's not good. Full charge capacity is below the dreaded "immediate replacement" level. Looks like we need to get the batteries replaced. The process of replacing the batteries is very straightforward. The node (cell or compute) has to be powered off and opened up, the old battery is unplugged from the LSI disk controller, and the new battery is connected. Repeat for each of your servers. No cluster-wide outage is required... the batteries can be replaced in a rolling fashion, one node at a time. After a few hours, the new battery is charged, and the disks return to WriteBack mode. One caveat is that the LSI firmware image included in Exadata storage server versions prior to 11.2.2.1.1 does not recognize the new batteries. Most V2 Exadata systems shipped with version 11.2.1.3.1 or older. This means that if you're running a V2 and haven't patched yet, it's time to start looking at getting the system up to date. If you stay on an older version and choose to replace the batteries, you will most likely see no benefit. All the more reason to keep your Exadata up to date.

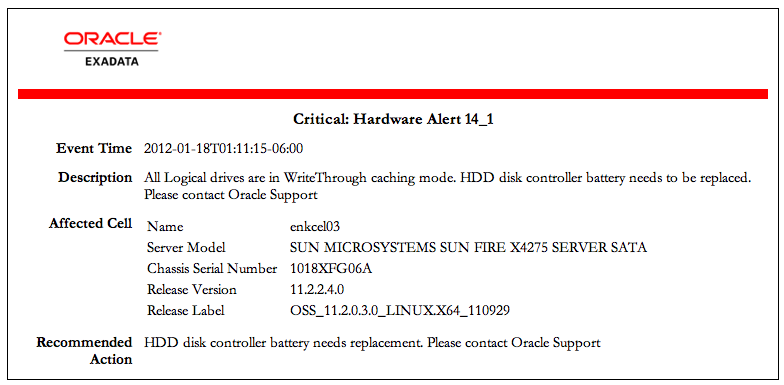

One thing that I was curious about was how the battery degradation was missed. Exadata systems run a periodic check of the battery that forces it down to no charge and then allows it to charge back up. If you own an Exadata system, you have most likely seen the quarterly (formerly monthly) alerts that all drives are in WriteThrough mode. After a few cycles, it's easy to become desensitized to these messages and just delete them. It's imperative that you ensure that you also receive a message that the disks have returned to WriteBack mode. If you don't receive this, then your batteries may need to be replaced. Two messages should be received for each storage server - one that the disks are in WriteThrough mode, and one that they've returned to WriteBack mode. The messages should look like this:

Unfortunately, it's not quite good enough to wait for the all-clear messages to come flooding into your mailbox. The compute nodes do not send these messages, which means that you could be in WriteThrough mode without being notified. While it's not as critical as it is on the storage servers, running in WriteThrough mode will show some performance degradation when running operations against the local disks (trace files, logs, local batch jobs, etc.). To resolve this, I suggest running Exachk (MOS note #1070954.1) at least once a quarter. It will help diagnose anything that may have gone sideways in your Exadata environment.
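Between Exachk runs, a quick sweep of the compute nodes is easy to script. The sketch below is one possible approach, not an official check. It assumes the cache policy query is run on every node through `dcli`, that `dcli` prefixes each output line with `hostname: `, and that a group file such as `~/dbs_group` lists your compute nodes. The function name `writethrough_hosts` is hypothetical.

```shell
#!/bin/bash
# Hypothetical helper: reads host-prefixed MegaCli output on stdin
# (lines like "enkdb01: Current Cache Policy: WriteThrough, ...") and
# prints each host that has at least one drive in WriteThrough mode.
writethrough_hosts() {
    awk -F': *' '
        $2 == "Current Cache Policy" && $3 ~ /^WriteThrough/ { print $1 }
    ' | sort -u
}

# Usage (the group file name and root login are assumptions):
#   dcli -g ~/dbs_group -l root \
#     '/opt/MegaRAID/MegaCli/MegaCli64 -LDInfo -LALL -aALL' | writethrough_hosts
```

An empty result means no node reported WriteThrough. A check like this could be run from cron, with any hosts it prints sent to whoever watches the storage server alerts.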

Happy replacing!

Hi Andy,

This is all very familiar in our V2 environments. We have replaced many batteries. There is a check to show the battery version. These V2s shipped with BBU 7, which, although meant to last 2 years before preventative maintenance swaps it out, seems to last around 18 months or so. They get replaced with BBU 8, which I’ve been told may last a bit longer.

Have had over 50 batteries replaced this year in V2’s. When they start going, lots of them tend to go together!

I’ve seen issues, and been told by Oracle it’s quite important to ensure the compute nodes are in writeback.

regards,

Jason

Andy,

I think you saved my day.

We have been struggling recently with a performance issue we could not get through.

Turns out that the exact time when the cache policy on a compute node changed from “WriteBack” to “WriteThrough” matches exactly with the beginning of the problems.

I will report back as soon as the batteries are changed…

As per now, a big THANK YOU

Andrea

We had our batteries changed, and our problems solved.

The issue started with TRUNCATEs lasting for tens of minutes. These were accompanied by “local write wait” and “enq: RO – fast object reuse” events. “log file sync” and “log file parallel write” were also quite unusual.

All clues pointed in the direction that we were facing slow writes, but without your blog post we’d probably be still out there in the dark…

Once again, THANK YOU

Andrea

We executed the following commands on a compute node and have a problem:

# /opt/MegaRAID/MegaCli/MegaCli64 -LDInfo -LALL -aALL | grep "Cache Policy"

Default Cache Policy: WriteBack, ReadAheadNone, Direct, No Write Cache if Bad BBU

Current Cache Policy: WriteThrough, ReadAheadNone, Direct, No Write Cache if Bad BBU

Disk Cache Policy : Disabled

# /opt/MegaRAID/MegaCli/MegaCli64 -AdpBbuCmd -a0 | grep "Full Charge" -A5 | sort | grep Full -A1

#

We didn’t receive any message/information from the node, as if there is no battery in the node.

Is that normal?

Regards,

Roman

Roman,

Was this battery replaced? If so, what version of the Exadata software are you on? You can run imageinfo to find out.

Andy

Andy,

No, I am talking about a year-and-a-half-old Exadata X2-2 system in its original configuration.

We didn’t replace the battery, yet.

The Exadata SW version is:

Kernel version: 2.6.18-238.12.2.0.2.el5 #1 SMP Tue Jun 28 05:21:19 EDT 2011 x86_64

Image version: 11.2.2.4.2.111221

Image activated: 2012-01-29 16:33:51 +0100

Image status: success

System partition on device: /dev/mapper/VGExaDb-LVDbSys1

Roman

Is any downtime required for the DB and storage nodes in an X2-2 quarter rack while the RAID controller batteries are replaced?

In order to replace the battery, the host must be shut down. It’s required in order to remove the controller. This can be done in a rolling fashion, so there is no complete outage of the cluster.